Robotic etiquette

Engineering improved human-robot interaction

Most people don’t know what to do when they first encounter a robot. Some swarm around it. Some stand back. And some misinterpret its behavior. That’s what happened to Leila Takayama, UC Santa Cruz associate professor of computational media, when she first met PR2 (Personal Robot 2), a social robot built by the robotics company Willow Garage.

When she walked through the front door of the company’s Palo Alto headquarters, the PR2 rolled up to her and paused. She expected a greeting, but it spun its head around and ran away. “I felt blown off, but that doesn’t make sense,” Takayama said. “It was just trying to get from point A to point B and was replanning its path, but I couldn’t help but feel insulted.”

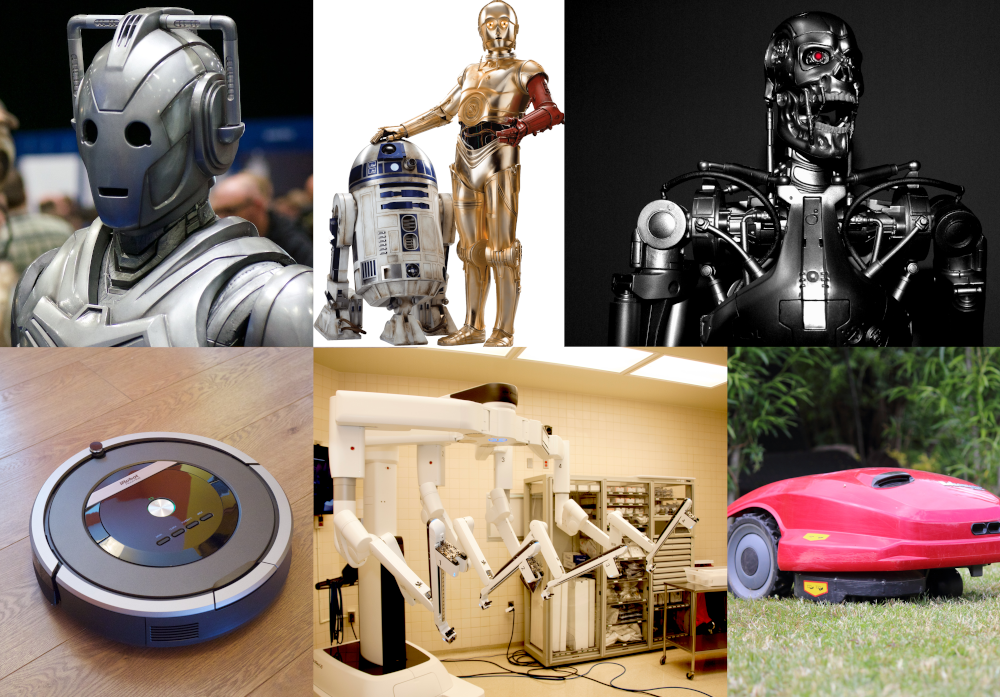

It’s natural to misread a robot’s intentions, both because of how robots are currently designed and because of our usually inflated expectations of what they can do, said Takayama, who aims to improve both sides of this stilted relationship with her research on human-robot interaction. This growing field of study, driven by rapid advances in both artificial intelligence and robotics engineering, employs a broad set of disciplines to explore a wide range of challenging subjects, from how humans work with remote, tele-operated unmanned vehicles and surgical robots, to self-driving vehicles and collaborations with anthropomorphic social robots.

While personal computers have become much more user-friendly over time, that’s not the case for robots. “They’re still in the dark ages, in terms of being usable and useful for normal people. I felt like that’s a pretty big problem that could be addressed with better human-centered design and research,” Takayama said.

Bad manners

In the next several decades, we’ll likely see a wide range of robots ambling about our hospitals, homes, workplaces, and groceries, as well as on sidewalks and in the street, where autonomous cars count as robots, too. Prototypes of those future robots already wander about some cities, especially in the Bay Area. But today’s robots lack the subtle common sense we humans take for granted every day as we navigate our social world. And without this common sense in robots, humans get confused. Takayama’s broad background in the social sciences, cognitive science, behavioral science, and psychology comes in handy here, giving her insights that engineers might not recognize as relevant and important.

For example, in order to conserve power, engineers typically build robots to be efficient with their motions. But that can be a problem, said Wendy Ju, an assistant professor of information science at Cornell University and frequent Takayama collaborator. “The unintended effect is that robots seem stiff, even kind of snotty. Robots need to move around to seem natural and make people feel more comfortable. That’s something that only someone who studies people would think of,” she said.

To improve robot manners, Takayama and her team are working to help them take cues from people. When a robot trundles into an elevator, it typically does the selfish thing and sits smack in the center, leaving little room for human passengers. The same happens in hospital corridors, where robots bother staff by hogging space. “Most humans know it’s not polite to do that. We use interactive behavior programming to help people help robots do the right thing—it’s basically teaching them social skills,” Takayama said.

As might be expected, people warm up to robots that behave more like people. In one collaboration, Takayama is studying people’s perceptions of robots that spend time exploring, in addition to performing a primary task. The findings of this work, presented in March 2020 at a human-robot interaction conference, showed that people often have positive views of these “curious” robots, but that they rate the robots as less competent when the robots deviate too far from their task.

In an earlier project, Takayama tapped an expert animator to help generate movements that let people understand what a robot intended to do, such as open a door or pick up a bottle. “Takayama showed how a robot can be incredibly expressive,” said Anca Dragan, an assistant professor of electrical engineering and computer sciences at UC Berkeley. Dragan was developing algorithms that enable robots to work with, around, and in support of people, and Takayama’s demonstration inspired her to work on robots that generate expressive motions autonomously: “I ended up asking is there a way for the robot to come up with those motions itself?”

Ultimately, if robots behave more expressively, people can better understand what they’re doing and anticipate what they’re going to do. This has important consequences for self-driving cars, Dragan said, since the way robots drive needs to be consistent with human driving, and both need to anticipate the other to avoid accidents.

Human side

Importantly, when humans and robots both learn, both benefit. Human-robot interactions improve when people have more informed and realistic expectations of a robot’s abilities, said Laurel Riek, an associate professor of computer science and emergency medicine at UC San Diego.

In a recent study, which supports the findings in Takayama’s work, Riek asked people to work with a mobile robot to hang a large banner. Unbeknownst to participants, the robot was intentionally programmed to make mistakes, such as dropping its end. Some participants were told the robot might malfunction, and they responded differently from others who received no warning. “The participants in the low-expectation setting recovered more quickly from the errors, regaining trust in the robot and improving their perceptions of its reliability,” Riek said.

The implication is that people’s unrealistic expectations can hamper their interactions with robots. The videos they see online, such as the popular YouTube clips of the robots built by Boston Dynamics, give the wrong impression, Takayama said. “I wish they’d show more of the blooper reels, the 199 takes before the demo finally worked. This stuff is hard. Robots are actually not that capable,” she said.

These outsized expectations also have important implications for the robot-enabled future, beyond their immediate impact on human-robot interactions. People have preconceived notions about robots shaped by decades of media-fueled hope, fear, and hype. Early roboticists envisioned anthropomorphic robots with broad, human-like functionality, a concept now firmly planted in the minds of most people. It’s now clear, however, that robots work best when they’re specialized, which translates to myriad different designs depending on the robot’s main task. The faceless robot that stocks the shelves will be strikingly different from the one with the friendly face working the cash register.

The image problem is exacerbated by the media portrayals of humanoid robots that frequently give the impression of much broader capability than is actually the case, Takayama said. Such robots typically disappoint when encountered in real life, offering just a highly articulated face and not much else. These false impressions contribute to the hype that surrounds and damages the field, Takayama said. “If you’re going to make it look like a human, you should make it live up to those expectations,” she said. “Why not make a Roomba instead?”

Portrayals of robots in popular culture have also contributed to the misconceptions. East Asian science fiction, like the Japanese series Astro Boy, has promoted both hope and fear about artificial intelligence and robots. More recent Western movies like Robot & Frank and series like Westworld ask nuanced questions about potential relationships with robots, but robot portrayals in earlier ones, like HAL in 2001: A Space Odyssey, primarily warned about the potential dangers of a robotic future, Takayama said.

Takayama and her colleagues are hard at work trying to shape a robotic future that reflects the best of humanity. “We should be thinking harder about what robots we should be building, not just what robots we could be building,” she said. “Just because you can build it, is that the future we want? That’s the real question.”